When deciding whether to use Linode as my Kubernetes provider, I needed to make sure that read-write-many (RWX) volumes would be available for the deployments that need them. A quick search turned up only this guide on setting them up with Rook, which is deprecated. Not cool.

On other cloud providers, I created read-write-many volumes using a Network File System — so I wondered if I could do the same on Linode. Answer: yes. And it’s actually pretty easy and works well. Here’s how:

You will need the following:

- A Linode Kubernetes (LKE) cluster,

- A Linode (VM) in the same data center as your cluster (to be added to a VLAN with your cluster),

- A Network File System server running on the Linode VM (very easy to install).

Creating a “Virtual Private Cloud” is now an option in the sidebar of the Linode/Akamai dashboard. I don’t know if a Linode VPC would also work, but I set my system up using a VLAN.

Configuring a VLAN on Linode

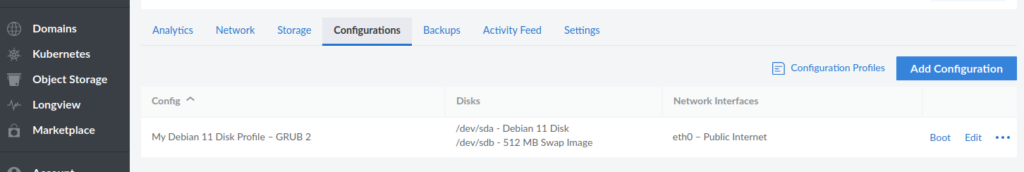

Setting up a VLAN on Linode is easy if you know how to do it, but it is not very intuitive. In the “Linodes” section of the dashboard, go to the “Configurations” section for the Linode you’re planning to use as the NFS, and edit the current configuration:

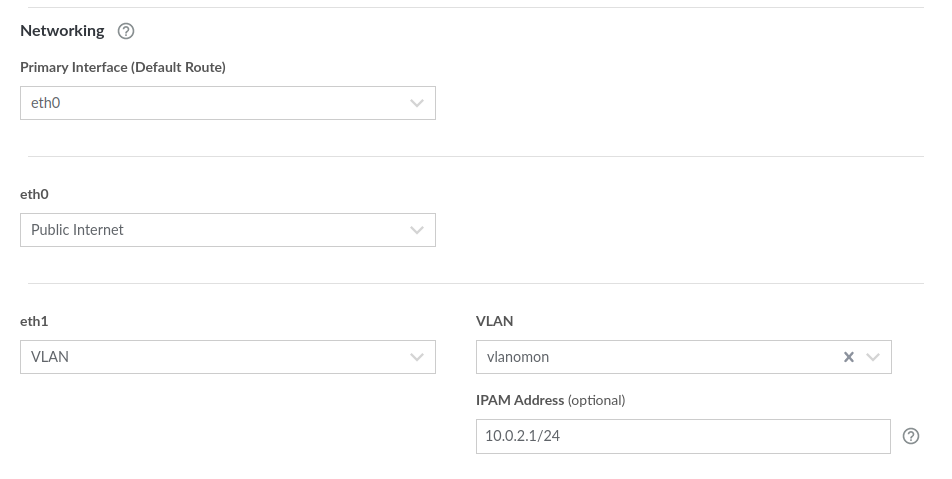

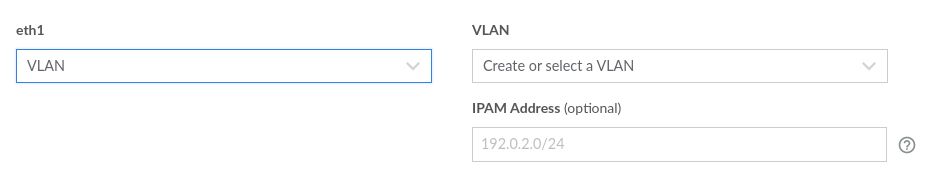

In the edit dialog, for eth1, select “VLAN”:

In the “VLAN” field, define a name for your VLAN (I named mine “vlanomon”), and select an IPAM address.

Weirdly, I found that the example IPAM address that is shown greyed-out in the GUI (192.0.2.0/24) did not work for me. I think the 192 range is already in use for giving the Linodes private IP addresses within the data center; it is also worth knowing that 192.0.2.0/24 is a block reserved for documentation examples (TEST-NET-1, RFC 5737) rather than a private range. Other examples of setting up a VLAN on Linode used the 10 range, so I tried that and it worked. So, for example, 10.0.2.1/24 will work just fine.

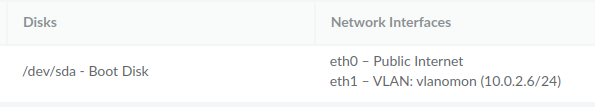

Do the same for all of the Linodes that make up the nodes of your LKE cluster, selecting the same VLAN name and giving them other addresses in the same subnet (e.g. 10.0.2.3/24, etc.).

I found that the Linodes had to be rebooted before the VLAN became active, and that it needed to be done in a particular way:

- Do: reboot from the “Reboot” button in the Linode/Akamai dashboard,

- Don’t: reboot from the command-line in the linode VM itself (doing this after editing the network configuration messed up my linode’s networking completely),

- Don’t: “Recycle” your node in the Kubernetes section of the dashboard (this will replace the linode, hence undo the changes to the networking).

After this is done, your linodes can communicate with each other freely and securely on their new internal IP addresses.

Note that it is not technically necessary to connect your linodes with a VLAN. All linodes within a given data center can communicate with each other on addresses that are internal to the data center. However, this is not secure since other users in the same data center can potentially listen in on your communications.

Configuring the NFS

It’s easy to find instructions for installing an NFS server on Linux, and any of them will do. Personally, I made an Ansible script to do it, but for clarity and completeness I will write out the commands here for a Debian system:

Install the relevant package:

sudo apt update && sudo apt install nfs-kernel-server

Create the directory that you plan to use as the volume to be mounted:

sudo mkdir /export

Update the /etc/exports file to allow the other nodes to mount this volume from their internal VLAN addresses. Basically, it should contain a line that looks like this:

/export 10.0.2.0/24(rw,sync,no_subtree_check)

In the above line, naturally 10.0.2.0/24 is the CIDR used by the VLAN. You might want to review the various export options (in parentheses) to select which ones you’d like to use.
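If you script this step, it helps to make it idempotent so that re-running it does not duplicate the entry. Here is a minimal sketch, assuming a hypothetical helper named `add_export` (my own name, not part of any NFS tooling) that appends the line only when it is not already in the file:

```shell
# Hypothetical helper: append an export entry to an exports file only if
# that exact line is not already present.
#   $1 = exports file (normally /etc/exports)
#   $2 = full export line
add_export() (
  exports_file=$1
  export_line=$2
  # -x: match the whole line, -F: treat the pattern as a fixed string
  if ! grep -qxF -- "$export_line" "$exports_file" 2>/dev/null; then
    printf '%s\n' "$export_line" >> "$exports_file"
  fi
)
```

Because this is a shell function, `sudo` cannot call it directly. From a root shell on the NFS Linode, the call would look like `add_export /etc/exports '/export 10.0.2.0/24(rw,sync,no_subtree_check)'`.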

Aside: A popular export option that I didn’t include in the line above is no_root_squash. This option is very convenient when using the NFS for kubernetes volumes because if the pod’s process is running as root, then it has the privileges of root for the volume also. Unfortunately, this convenience comes with security issues. Following best practices, your pod should not be running as root, and your volume should be configured to squash any root process mounting it. Since you can log onto the linode yourself (and use sudo), you can easily change the ownership of the various subtrees of the export directory to match the user ids of your pods.
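To make the ownership step in the aside concrete, here is a rough sketch. The helper name `prepare_share_dir`, the subdirectory name and the UID/GID values are all my own placeholders; use whatever user ID your pod actually runs as.

```shell
# Hypothetical helper: create a per-application subdirectory under the export
# root and hand it to the UID/GID that the pod's process runs as.
#   $1 = export root (e.g. /export)
#   $2 = subdirectory name
#   $3 = numeric UID of the pod's process
#   $4 = numeric GID of the pod's process
prepare_share_dir() (
  target="$1/$2"
  mkdir -p "$target" &&
  chown "$3:$4" "$target" &&
  chmod 0770 "$target"   # owner and group get full access, everyone else none
)
```

From a root shell on the NFS Linode, `prepare_share_dir /export try-nfs 1000 1000` would create /export/try-nfs owned by UID and GID 1000. A pod running as that user could then mount /export/try-nfs and write to it even though root is squashed.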

Restart the nfs-kernel-server.service:

sudo systemctl restart nfs-kernel-server.service

Re-export the share:

sudo exportfs -rav

In this example, I assume you have installed a firewall with ufw. If so, you need to allow the nfs ports (111 and 2049) to be accessed by the CIDR of your VLAN via TCP and UDP. After adding the rules, your rule list should contain the following:

$ sudo ufw show listening

tcp:

111 * (rpcbind)

[ 2] allow from 10.0.2.0/24 to any port 111 proto tcp

2049 * (-)

[ 4] allow from 10.0.2.0/24 to any port 2049 proto tcp

udp:

111 * (rpcbind)

[ 3] allow from 10.0.2.0/24 to any port 111 proto udp
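For reference, rules like the ones in this listing could be added as follows. This is a sketch rather than the exact commands I ran: the helper name `nfs_ufw_rules` is hypothetical, and it only prints the `ufw` commands so that you can review them before applying anything.

```shell
# Print (do not run) the ufw commands that open the NFS-related ports
# (111 for rpcbind, 2049 for nfs) to a single CIDR, over both TCP and UDP.
#   $1 = CIDR of the VLAN
nfs_ufw_rules() (
  cidr=$1
  for port in 111 2049; do
    for proto in tcp udp; do
      echo "ufw allow from $cidr to any port $port proto $proto"
    done
  done
)
```

Running `nfs_ufw_rules 10.0.2.0/24` prints the four commands. Once they look right, `nfs_ufw_rules 10.0.2.0/24 | sudo sh` applies them, and `sudo ufw status numbered` shows the resulting rule list.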

With this, your NFS server is ready to go.

Mounting the ReadWriteMany volume on a pod

Once the NFS server is available to your cluster, it’s very easy to mount it on a pod. Here’s a very simple example deployment manifest:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: try-nfs
      name: try-nfs
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: try-nfs
      template:
        metadata:
          labels:
            app: try-nfs
        spec:
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - mountPath: /data/
              name: data-volume
          volumes:
          - name: data-volume
            nfs:
              path: /export/
              readOnly: false
              server: 10.0.2.1

Naturally the server IP in the above example manifest has to be the same as the IPAM Address configured for the linode VM that is acting as the NFS server.
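One way to convince yourself that the replicas really share the volume is to write a file from one pod and read it back from all of them. The sketch below assumes a hypothetical helper named `check_rwx` and a working `kubectl` context for the cluster; the file name `rwx-check.txt` is also just a placeholder.

```shell
# Hypothetical check: write a token from the first matching pod, then confirm
# that every matching pod reads the same token from the shared mount.
#   $1 = label selector (e.g. app=try-nfs)
#   $2 = mount path inside the pods (e.g. /data)
check_rwx() (
  selector=$1
  mount_path=$2
  pods=$(kubectl get pods -l "$selector" -o name) || exit 1
  [ -n "$pods" ] || { echo "no pods match $selector" >&2; exit 1; }
  writer=$(printf '%s\n' "$pods" | head -n 1)
  token="rwx-check-$$"
  kubectl exec "$writer" -- sh -c "echo $token > $mount_path/rwx-check.txt" || exit 1
  for pod in $pods; do
    seen=$(kubectl exec "$pod" -- cat "$mount_path/rwx-check.txt") || exit 1
    [ "$seen" = "$token" ] || { echo "$pod does not see the file" >&2; exit 1; }
  done
  echo "all pods see the same file"
)
```

For the example deployment, the call would be `check_rwx app=try-nfs /data`. If the write step fails with a permission error, that is most likely the root squashing described in the aside above: the ownership of the directory on the NFS server has to match the user that the pod's process runs as.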

As you can see, you don’t even need to define a PV or a PVC object to mount an NFS-based volume. You can if you want to — that’s where you’d see that it is configured as ReadWriteMany. But you can simplify your manifests by declaring the NFS volume directly in the deployment manifest, and you’ll see that it behaves as a RWX volume.
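If you do want the explicit PV and PVC objects, a minimal sketch could look like the following. The object names, the file name `nfs-pv.yaml` and the 10Gi size are placeholders of mine, not something from my own setup; the server address and path match the example above.

```shell
# Write a PersistentVolume and a matching PersistentVolumeClaim to a file.
# Assumptions: the names, the 10Gi size and the file name are placeholders.
cat > nfs-pv.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-export
spec:
  capacity:
    storage: 10Gi            # required field; NFS itself does not enforce it
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""       # empty string: bind statically, no dynamic provisioner
  nfs:
    server: 10.0.2.1
    path: /export
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-export
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: nfs-export     # bind to the PersistentVolume above
  resources:
    requests:
      storage: 10Gi
EOF

# Then, with kubectl pointed at the cluster:
#   kubectl apply -f nfs-pv.yaml
```

With these objects in place, the `nfs:` stanza under `volumes` in the deployment would be replaced by a `persistentVolumeClaim` entry with `claimName: nfs-export`.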

And that’s it! For the price of one additional linode, you can enjoy simple and convenient RWX data volumes for your LKE cluster.

Hi and thanks for the useful guide

My question is how this approach with the additional VM compares to installing the NFS in the cluster using a helm chart, e.g. https://kubernetes-sigs.github.io/nfs-ganesha-server-and-external-provisioner/ … What are the pros and cons? Because I want to avoid the extra cost of the additional VM if possible.

Hi Mohamed, good question!

[tl;dr] : Using the chart you linked to is almost certainly cheaper than adding a whole VM, and it should work as well.

The storage space used by the NFS volume has to come from somewhere, and wherever it comes from, you’ll have to pay for it. I read some of the documentation in your link to find out where the nfs-ganesha-server is getting the storage space from, and it looks like you can configure it, but the default is that it claims a standard volume from your cloud provider.

A standard volume costs money, but it’s a lot cheaper than a whole VM. And turning one standard volume into an NFS volume provisioner (that can be used to create unlimited volumes) is cheaper than claiming a new individual volume for every pod that needs storage.

Personally, I like using a separate VM for this because it’s easy to view/access/backup all of the data volumes that my cluster is using. Plus I use my NFS VM for other things.

The one thing I’ve found I have to be careful about when claiming standard volumes is cleanup. Specifically, every time I claim a standard persistent volume, I have to delete it both in the cluster and *in the Linode/Akamai GUI*. Once, for a few months, I was still paying for some volumes that I had deleted on the cluster. They were no longer accessible on the cluster, but they were still billed to my account until I deleted them in the GUI.